Came across an interesting situation this morning and thought I would drop the solution I found here in case anyone else needs to figure this out.

Situation:

- Active Directory Domain Controller in an Azure VM

- Your admin account has an expired password

- RDP’ing to the machine says your password has expired and you need to set a new one, but it keeps sending you around in a circle: you need to update it … but you can’t.

The first thing you will likely try is the Reset Password option in the Azure portal. It doesn’t work for Domain Controllers (this changed recently … no idea why). You get an error message that says:

VMAccess Extension does not support Domain Controller

At this point you start trying to figure out if there is another admin account you can log in with. In my case this was a dev/test AD box and it only had the one admin account on it.

Solution:

Before you go and delete the VM and build a new one, here is an interesting way I found to fix this.

Updated 5/20/2021: The new way to run PowerShell via the admin portal makes things really simple.

- Go to the VM in the Azure portal

- Click Run Command in the left-hand navigation

- Choose “RunPowerShellScript” from the options

- Paste in the following PowerShell (replacing the placeholders with your admin username and the new password you want to set)

net user <YourAdminUserName> <YourNewPassword>

Then click Run and give the script a little while to run. When it completes, your password will be reset.
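If you would rather not click through the portal, the same Run Command feature can also be driven from Azure PowerShell. Here is a minimal sketch, assuming the Az.Compute module is installed and you have already signed in with Connect-AzAccount. The resource group and VM names are made up, so swap in your own.

```powershell
# Hypothetical names: replace with your own resource group and VM.
$resourceGroup = 'my-resource-group'
$vmName        = 'my-dc-vm'

# Write the one-line reset script to a temporary local file.
# Replace the placeholders with your admin username and new password.
$scriptPath = Join-Path ([System.IO.Path]::GetTempPath()) 'ResetPassword.ps1'
Set-Content -Path $scriptPath -Value 'net user <YourAdminUserName> <YourNewPassword>'

# Run the script on the VM through Run Command.
$result = Invoke-AzVMRunCommand `
    -ResourceGroupName $resourceGroup `
    -VMName $vmName `
    -CommandId 'RunPowerShellScript' `
    -ScriptPath $scriptPath

# Print whatever the script wrote to its output streams on the VM.
$result.Value | ForEach-Object { $_.Message }

# Delete the local copy, since it holds the password in plain text.
Remove-Item -Path $scriptPath
```

The script is written to a temp file only because `-ScriptPath` expects a file on disk. Deleting it afterwards keeps the new password from lingering on your workstation.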

Old way to do it:

You can use the Azure portal and a VM extension to upload and run a script on the machine to reset the password for you. Here is how you do it.

- Create a script called “ResetPassword.ps1”

- Add one line to that script

net user <YourAdminUserName> <YourNewPassword>

- Go to the VM in the Azure portal

- Go into the extensions menu for that VM

- In the top menu pick “Add”

- Choose the Custom Script extension

- Click Create

- Pick your ResetPassword.ps1 script file

- Click OK

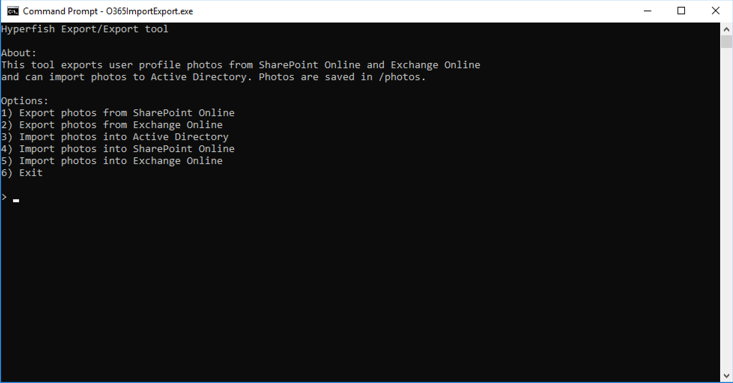

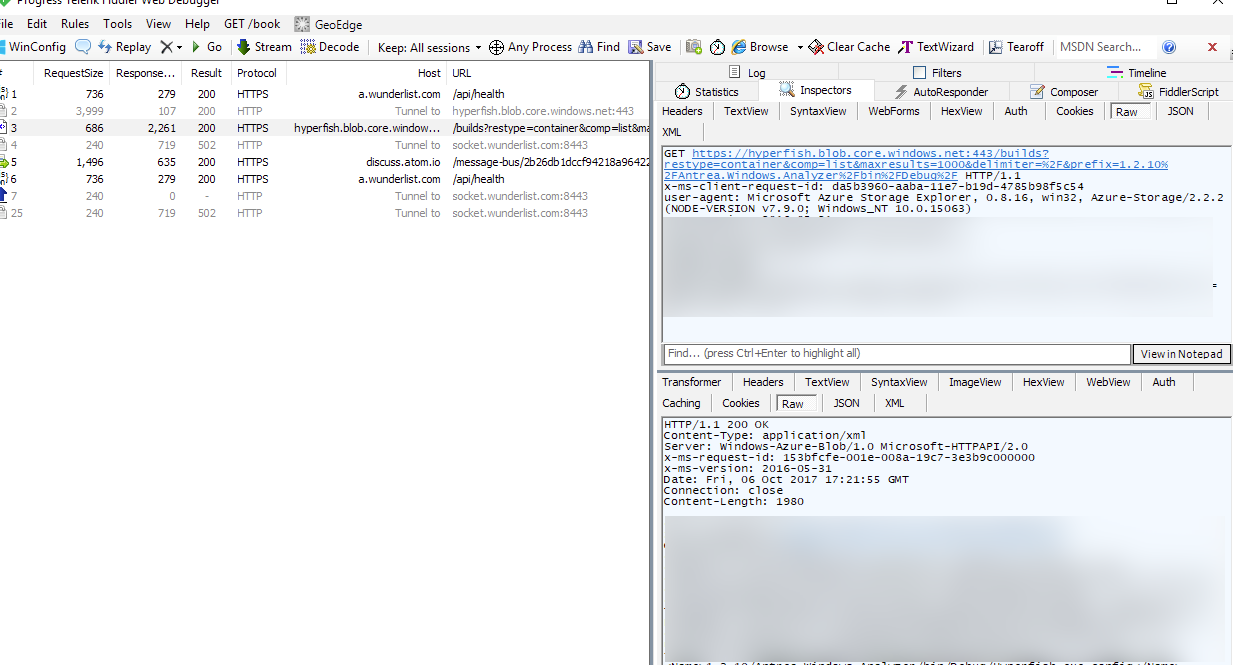

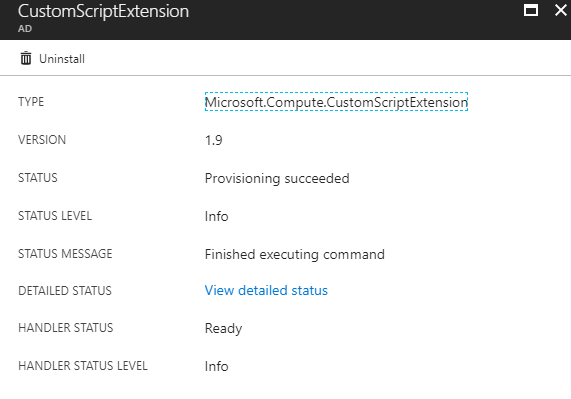

Wait for the extension to be deployed and run. After a while you will see a status that looks something like this:

You should be set to RDP into your machine again with the new password you set in the script file.
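For what it's worth, the Custom Script extension can also be deployed from Azure PowerShell instead of the portal. This is only a rough sketch and not the exact flow described above: it assumes the Az.Compute module, a signed-in session, and that you have uploaded ResetPassword.ps1 somewhere the VM can download it from. The resource names, the extension name, and the URL below are all hypothetical.

```powershell
# Hypothetical names: replace with your own resource group and VM.
$resourceGroup = 'my-resource-group'
$vmName        = 'my-dc-vm'

# Hypothetical URL: wherever you uploaded ResetPassword.ps1
# (for example a blob URL with a short-lived SAS token).
$scriptUri = 'https://example.com/scripts/ResetPassword.ps1'

# The extension has to be created in the same region as the VM.
$vm = Get-AzVM -ResourceGroupName $resourceGroup -Name $vmName

# Deploy the Custom Script extension and have it run the script.
Set-AzVMCustomScriptExtension `
    -ResourceGroupName $resourceGroup `
    -VMName $vmName `
    -Location $vm.Location `
    -Name 'ResetPassword' `
    -FileUri $scriptUri `
    -Run 'ResetPassword.ps1'

# Remove the extension once the password has been reset.
Remove-AzVMCustomScriptExtension `
    -ResourceGroupName $resourceGroup `
    -VMName $vmName `
    -Name 'ResetPassword' `
    -Force
```

Keep in mind the script contains the new password in plain text, so delete the uploaded copy as soon as you are back in.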

I have no idea why the reset password functionality in Azure decided to exclude AD DCs … but if you get stuck I hope this helps.

-CJ