One of the tips I gave during my session at TechEd North America this year was about using SignalR in your SharePoint provider hosted applications in Azure.

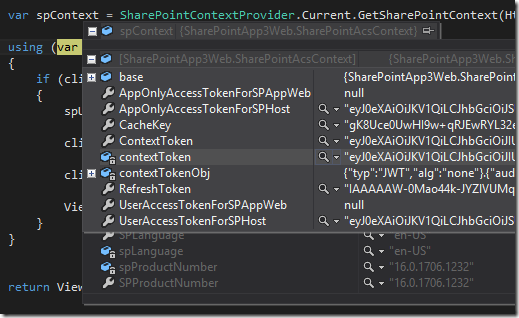

One of the pain points for developers and people creating provider hosted apps is monitoring them when they are running in the cloud. This might be just to see what is happening in them, or it might be to assist with debugging an issue or bug.

SignalR has helped me A LOT with this. It’s a super simple to use, real-time messaging framework. In a nutshell, it’s a set of libraries that let you send and receive messages in code, be that in JavaScript or .NET code.

So how do I use it in SharePoint provider hosted apps in Azure to help me monitor and debug?

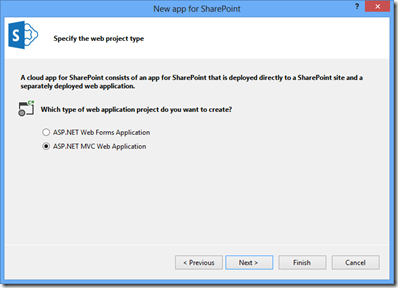

A SharePoint Provider Hosted App is essentially a web site that provides the parts of your app that surface in SharePoint through App Parts, App Pages etc… It’s a set of pages that can have code behind them, as any regular site does. It’s THAT code that I typically want to monitor while it’s running in Azure (or anywhere for that matter).

So how does this work with SignalR?

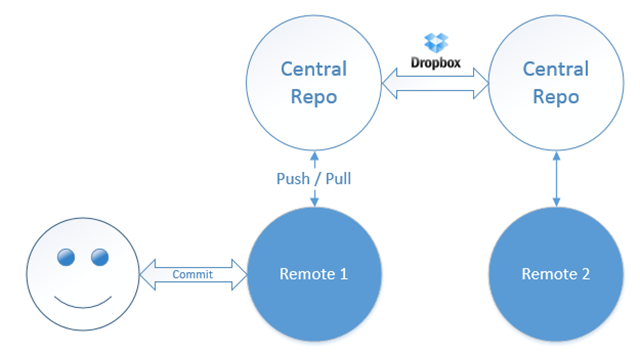

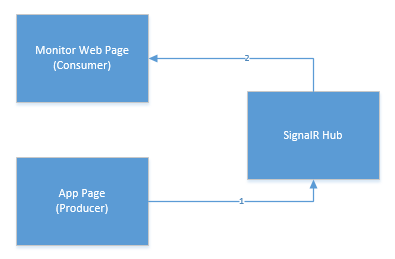

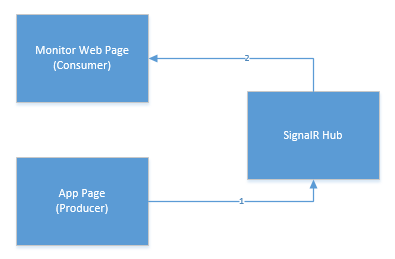

SignalR has the concept of Hubs that clients “subscribe” to and producers of messages “publish” to. In the diagram, the App Pages code publishes messages (such as “there was a problem doing XYZ”), and consumers listen to a Hub and receive messages when they are published.

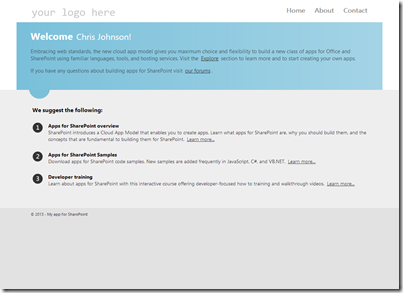
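To make the publish/subscribe idea concrete, here is a toy in-memory hub in plain JavaScript. It is only a sketch of the concept and not how SignalR is implemented; `MiniHub`, `subscribe` and `publish` are made-up names for illustration. It also shows that a message published while nobody is listening is simply gone.

```javascript
// Toy in-memory hub: illustrates publish/subscribe only, not SignalR's internals.
// MiniHub and its method names are hypothetical.
class MiniHub {
  constructor() {
    this.subscribers = new Set();
  }

  // Register a listener; returns a function that removes it again.
  subscribe(listener) {
    this.subscribers.add(listener);
    return () => this.subscribers.delete(listener);
  }

  // Deliver a message to whoever is listening right now.
  // Returns the number of current listeners; nothing is stored.
  publish(message) {
    for (const listener of this.subscribers) {
      listener(message);
    }
    return this.subscribers.size;
  }
}

// Usage: only the message published while a listener is attached is received.
const hub = new MiniHub();
const received = [];
const reachedBefore = hub.publish("nobody is listening yet");
const unsubscribe = hub.subscribe((m) => received.push(m));
const reachedAfter = hub.publish("there was a problem doing XYZ");
unsubscribe();
hub.publish("listener has left");
```

In SignalR the Hub plays the role of `MiniHub`, except that publishers and listeners can live in different processes and on different machines.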

In the example I gave at TechEd I showed a SharePoint Provider Hosted App deployed in Azure that Published messages whenever anyone hit a page in my app. I also created a “Monitor.aspx” page that listened to the Hub for those messages from JavaScript and simply wrote them to the page in real-time.

How do you get this working? It’s pretty easy.

Part 1: Setting up a Hub and publishing messages

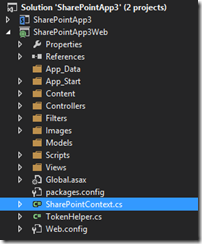

First add SignalR to your SharePoint Provider Hosted app project from NuGet. The screenshot shows the libraries to add.

Then in your Global.asax.cs you need to add an Application_Start method like this. It registers SignalR and maps the hub URLs correctly.

    // Requires: using System.Web.Routing;
    protected void Application_Start(object sender, EventArgs e)
    {
        // Register the default hubs route: ~/signalr
        RouteTable.Routes.MapHubs();
    }

Note: You might not have a Global.asax file in which case you will need to add one to your project.

Then you need to create a Hub to publish messages to and receive them from. You do this with a new class that inherits from Hub like this:

    // Requires: using Microsoft.AspNet.SignalR;
    public class DebugMonitor : Hub
    {
        // Broadcast the message to every connected client
        public void Send(string message)
        {
            Clients.All.addMessage(message);
        }
    }

This provides a single method called Send that any code in your SharePoint Provider Hosted app can call when it wants to send a message. I wrapped this code up in a short helper class called TraceCaster like this:

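    // Requires: using Microsoft.AspNet.SignalR;
    public class TraceCaster
    {
        // Context for the DebugMonitor hub, usable from code outside the hub class
        private static readonly IHubContext context = GlobalHost.ConnectionManager.GetHubContext<DebugMonitor>();

        public static void Cast(string message)
        {
            context.Clients.All.addMessage(message);
        }
    }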

This gets a reference to the Hub, called “context”, and then uses it in the Cast method to publish the message. In code I can then send a message by calling:

    TraceCaster.Cast("Hello World!");

That is all there is to publishing/sending a simple message to your Hub.

Now the fun part … receiving them 🙂

Part 2: Listening for messages

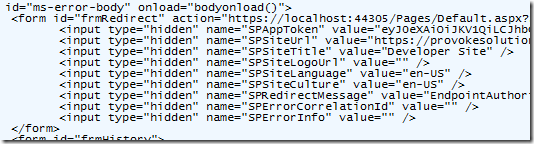

In my app I created a new page called Monitor.aspx. It has no code behind, just client-side JavaScript. It first references some JS script files: jQuery, SignalR and then the generic Hubs endpoint that SignalR listens on.

    <script src="/Scripts/jquery-1.7.1.min.js" type="text/javascript"></script>
    <script src="/Scripts/jquery.signalR-1.1.1.min.js" type="text/javascript"></script>
    <script src="/signalr/hubs" type="text/javascript"></script>

When the page loads you want some JavaScript that starts listening to the Hub and registers a function “addMessage” that is called when a message is sent from the server.

    $(function () {
        // Proxy created on the fly
        var chat = $.connection.debugMonitor;

        // Declare a function on the chat hub so the server can invoke it
        chat.client.addMessage = function (message) {
            var now = new Date();
            // format() is not built into Date; this assumes a date-formatting script that adds it is loaded
            var dtstr = now.format("isoDateTime");
            $('#messages').append('[' + dtstr + '] - ' + message + '<br/>');
        };

        // Start the connection
        $.connection.hub.start().done(function () {
            $("#broadcast").click(function () {
                // Call the chat method on the server
                chat.server.send($('#msg').val());
            });
        });
    });

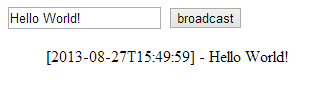

This code uses the $.connection.hub.start() function to start listening to messages from the Hub. When a message is sent, the addMessage function is fired and we can do whatever we like with it. In this case it simply adds it to an element on the page.
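If you would rather not depend on a date-formatting script, or you want to be safe when a message contains HTML, the line that gets written to the page can be built by a small helper. This is just a sketch: `escapeHtml` and `formatTraceLine` are names I made up, and it uses the built-in `toISOString()` rather than the format call shown in the page script.

```javascript
// Escape the characters that matter when text is inserted into the page as HTML.
function escapeHtml(text) {
  return String(text)
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;")
    .replace(/'/g, "&#39;");
}

// Build one log line: "[<ISO timestamp>] - <escaped message><br/>".
// `when` is optional and defaults to the current time.
function formatTraceLine(message, when) {
  const stamp = (when || new Date()).toISOString();
  return "[" + stamp + "] - " + escapeHtml(message) + "<br/>";
}

// Example with a fixed date so the result is predictable.
const line = formatTraceLine("<b>page hit</b>", new Date(Date.UTC(2013, 5, 4, 9, 30, 0)));
// line === "[2013-06-04T09:30:00.000Z] - &lt;b&gt;page hit&lt;/b&gt;<br/>"
```

Inside the addMessage handler you would then call something like `$('#messages').append(formatTraceLine(message));`.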

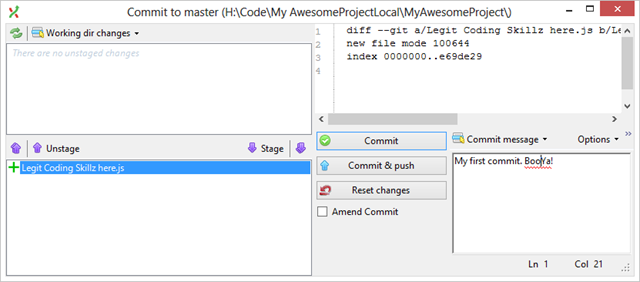

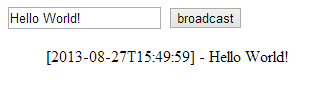

All going well, when your app is running you will be able to open up Monitor.aspx and see messages like this flowing:

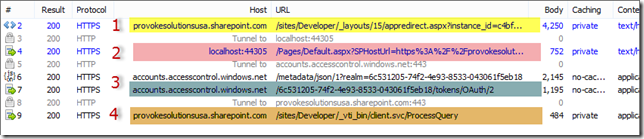

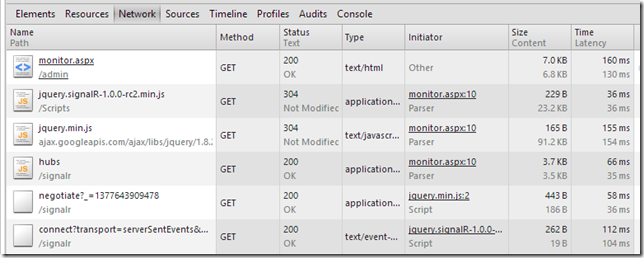

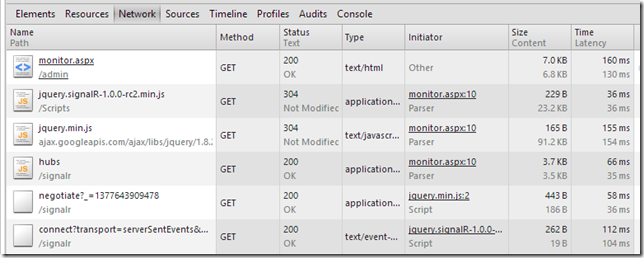

If you don’t see messages flowing you probably have a setup problem with SignalR. The most common thing I found when setting this up was the client not being able to correctly reference the SignalR JS or the Hub on the server. Use the developer tools in IE or Chrome (or Fiddler) to check that the calls being made to the server are working correctly (see the screenshot for what working should look like).
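Besides the browser tools, the SignalR JavaScript client can report what the connection is doing. Here is a rough sketch of a helper that turns on client logging and reports errors and state changes. `attachDiagnostics`, `STATE_NAMES` and the `log` callback are hypothetical names of mine; the `logging`, `error` and `stateChanged` members are the ones the client exposes on `$.connection.hub`, and the numeric states are assumed to match `$.signalR.connectionState`.

```javascript
// Readable names for the numeric states (assumed to match $.signalR.connectionState).
const STATE_NAMES = { 0: "connecting", 1: "connected", 2: "reconnecting", 4: "disconnected" };

// Wire up diagnostics on a SignalR hub connection (normally $.connection.hub).
// `log` is any function taking a string, e.g. one that writes to the page or console.
function attachDiagnostics(hubConnection, log) {
  hubConnection.logging = true; // verbose client tracing in the browser console

  // Report connection-level errors
  hubConnection.error(function (error) {
    log("SignalR error: " + error);
  });

  // Report every state transition, falling back to the raw number if unknown
  hubConnection.stateChanged(function (change) {
    const from = STATE_NAMES[change.oldState] || change.oldState;
    const to = STATE_NAMES[change.newState] || change.newState;
    log("SignalR state: " + from + " -> " + to);
  });

  return hubConnection;
}
```

On Monitor.aspx you would call something like `attachDiagnostics($.connection.hub, console.log.bind(console));` before `$.connection.hub.start()`. If the state never reaches “connected”, the script references or the /signalr/hubs URL are the first things to check.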

“What if I am not listening for messages? What happens to them?” I hear you say! Well, unless someone is listening for the messages they go away. They are not stored. This is a real-time monitoring solution. Think of it as a window into what’s going on in your SharePoint Provider Hosted app.

There are client libraries for .NET, JS, iOS and Android too, so you can publish and listen for messages on all sorts of platforms. Another application I have used this on is simple real-time communication between Web Roles in Azure and Web Sites in Azure. SignalR can use the Azure Service Bus to assist with this and it’s pretty simple to set up.

Summary

I’m a developer from way back when debugging meant printf. Call me ancient but I like being able to see what is going on in my code in real time. It just gives me a level of confidence that things are working the way they should.

SignalR coupled with SharePoint Provider Hosted Apps in Azure is a great combination. It doesn’t provide a solution for long-term application logging, but it does provide a great little real-time window into your app that I personally love.

If you want to learn more about SignalR then I suggest you take a look at http://www.asp.net/signalr where you will find documentation and videos on other uses for SignalR. It’s very cool.

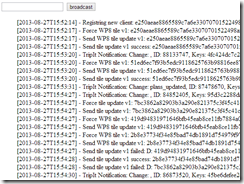

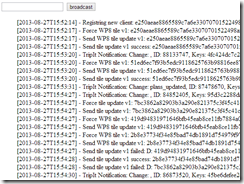

Do I use it in production? You bet! I use it in the backend of my Windows Phone and Windows 8 application called My Trips as well as in SharePoint Provider Hosted Apps in Azure. Here is a screenshot from the My Trips monitoring page, where I can watch activity for various devices registering with my service etc…

Happy Apping…

-CJ